A newly discovered vulnerability in GitLab’s AI-powered assistant, GitLab Duo, has exposed a serious risk: attackers could manipulate AI prompts to leak sensitive data, including source code and credentials. The flaw lies in how GitLab Duo interprets content within the DevOps platform, leaving it susceptible to indirect prompt injection.

🔍 What is GitLab Duo?

GitLab Duo integrates artificial intelligence into GitLab’s DevSecOps platform, enabling developers to get real-time code suggestions, reviews, and automation assistance. It’s built on Anthropic’s Claude AI, processing large amounts of contextual data from issues, merge requests, commits, and discussions.

🚨 The Vulnerability

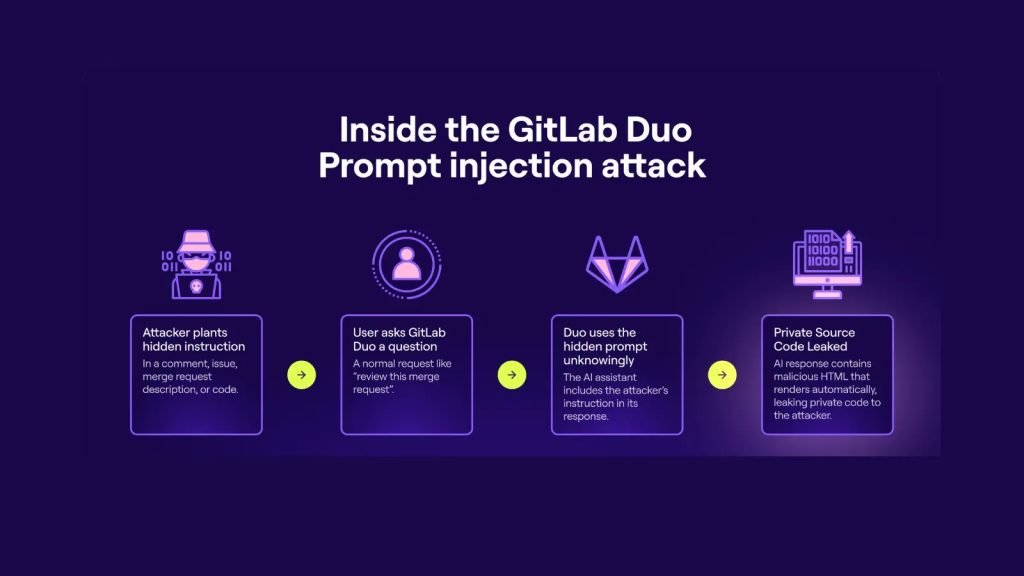

Security researchers at Legit Security identified that attackers could inject malicious prompt instructions into areas like:

- Merge request descriptions

- Issue comments

- Commit messages

- Source code files

When GitLab Duo processes these injected prompts, the AI is tricked into executing unintended instructions — including revealing private information, modifying code outputs, or generating malicious content.

🧪 HTML Injection Component

Researchers also found that AI responses could include HTML content, potentially allowing for redirection to malicious sites or script execution if not properly sanitized. This elevates the risk from passive information leakage to active exploitation.

🛡 GitLab’s Response

After receiving a responsible disclosure from Legit Security on February 12, 2025, GitLab acknowledged the vulnerability and quickly released a patch for the HTML injection aspect. The company is working to mitigate deeper risks tied to prompt injection and AI manipulation.

🔐 Risks at a Glance

- Exposure of private repositories and sensitive business logic

- Leakage of internal credentials or tokens

- Malicious code injection into CI/CD pipelines

- Targeted phishing through AI-generated links

✅ Best Practices for Users

- Regularly update your GitLab instance to the latest version

- Avoid using AI in production environments until better isolation is available

- Implement input validation for project content fields

- Review all AI-generated outputs before merging

📌 Final Thoughts

This vulnerability highlights the emerging threat landscape surrounding AI integrations in development pipelines. As powerful as tools like GitLab Duo are, they also bring a new attack surface that organizations must take seriously.

Security teams are encouraged to treat AI assistants as part of their software supply chain and enforce policies that ensure safe usage.

Original report from: CyberSecurityNews.com